i’ve always assumed that whatever meat didn’t pass QC for human-grade canned tuna would just become cat food.

wondered why your pet might not like particular foods?

No. It’s the same reason that you don’t like particular perfectly good foods. They’re attuned to different factors, but it’s the same process to appeal to them.

i worked at an animal hospital for a few years in my 20s (late 90s). I was also a broke-af punk kid living in a filthy punk rock house, barely able to afford my part of rent. So i’d bring home the pet food sometimes. It wasn’t really inventoried, and it’s nutrition. Do not recommend though, it’s a great way to get a bacterial gut infection since pet food regulations are very minimal.

it ranges. some cat food is indistinguishable from canned tuna. the science diet I/D canine prescription tastes exactly like canned corned beef hash. the cheap stuff (kibbles&bits, fancy feast, etc) tastes exactly like you’d expect: bone meal, corn starch, and ash slag. cause that’s the filler trash the cheap stuff is made of.

generally though, most kibble just tastes like if you soaked grape nuts cereal in beef broth, and most wet food tastes about the same as canned horse. which is unpleasant.

The answer to your overarching question is not “common maintenance procedures”, but “change management processes”

When things change, things can break. Immutable OSes and declarative configuration notwithstanding.

OS and Configuration drift only actually matter if you’ve got a documented baseline. That’s what your declaratives can solve. However they don’t help when you’re tinkering in a home server and drifting your declaratives.
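To make the "documented baseline" point concrete, here is a minimal sketch of a drift check. It assumes you can dump your state as a flat name-to-version mapping; the package names and versions are made up for illustration, and `find_drift` is a hypothetical helper, not part of any tool.

```python
def find_drift(baseline: dict, current: dict) -> dict:
    """Compare a documented baseline against the current state.

    Returns a dict mapping each drifted key to (expected, actual).
    A key missing on one side is reported with None on that side.
    """
    drift = {}
    for key in baseline.keys() | current.keys():
        expected = baseline.get(key)
        actual = current.get(key)
        if expected != actual:
            drift[key] = (expected, actual)
    return drift


# Hypothetical package versions, made up for illustration.
baseline = {"openssh-server": "9.2", "nginx": "1.22", "podman": "4.3"}
current = {"openssh-server": "9.2", "nginx": "1.24", "htop": "3.2"}

for name, (expected, actual) in sorted(find_drift(baseline, current).items()):
    print(f"{name}: baseline={expected} current={actual}")
```

Without the `baseline` dict there is nothing to compare against, which is the whole point: drift is only measurable relative to something you wrote down.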

I’m pretty certain every service I want to run has a docker image already, so does it matter?

This right here is the attitude that’s going to undermine everything you’re asking. There’s nothing about containers that is inherently “safer” than running native OS packages or even building your own. Containerization is about scalability and repeatability, not availability or reliability. It’s still up to you to monitor changelogs and determine exactly what is going to break when you pull the latest docker image. That’s no different than a native package.
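A rough sketch of the kind of check this implies, assuming you pin image tags and that the image happens to use plain `major.minor.patch` tags (plenty don’t, so treat that as an assumption). `parse_version` and `classify_update` are hypothetical helpers, not part of Docker or any registry API.

```python
import re


def parse_version(tag: str) -> tuple:
    """Parse a tag like '2.4.1' or 'v2.4' into a (major, minor, patch) tuple."""
    match = re.fullmatch(r"v?(\d+)(?:\.(\d+))?(?:\.(\d+))?", tag)
    if match is None:
        # Tags such as 'latest' carry no version information at all.
        raise ValueError(f"not a plain version tag: {tag!r}")
    return tuple(int(part or 0) for part in match.groups())


def classify_update(pinned: str, candidate: str) -> str:
    """Classify the jump from the pinned tag to a candidate tag."""
    old, new = parse_version(pinned), parse_version(candidate)
    if new <= old:
        return "none"
    if new[0] != old[0]:
        return "major"  # read the changelog before pulling
    if new[1] != old[1]:
        return "minor"
    return "patch"


print(classify_update("1.22.0", "2.0.0"))
print(classify_update("1.22.0", "1.22.3"))
```

Note that `latest` is rejected outright. If that’s what you’re pulling, you can’t tell how big the jump is, so you’re back to reading changelogs by hand.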

Just cause you’ve never seen them doesn’t make it not true.

Try using quadlet and a .container file on current Debian stable. It doesn’t work. Architecture changed, quadlet is now recommended.

Try setting device permissions in the container after updating to Debian testing. Also doesn’t work the same way. Architecture changed.

Red Hat hasn’t ruined it yet, but Ansible should provide a pretty good idea of the potential trajectory.

It isn’t. Its architecture changes pretty significantly with each version, which is annoying when you need it to be stable. It’s also dominated by Red Hat, which is a legit concern since they’ll likely start paywalling capabilities eventually.

Every complaint here is PEBKAC.

It’s a legit argument that Docker has a stable architecture while podman is still evolving, but that’s how software do. I haven’t seen anything that isn’t backward compatible, or very strongly deprecated with notice.

Complaining about selinux in 2024? Setenforce 0, audit2allow, and get on with it.

Docker doing that while selinux is enforcing is an actual bad thing that you don’t want.

The actual answer to OP’s question is to look up cognitive biases, and to eventually realize that “black” isn’t the relevant descriptor here.

Like seriously and I’m not even intending to be racist

(Though some smarmy asshole will for sure post this unironically thinking that they’re not being racist.)

Google the concept of an escrow service.

Free tier is super limited and super easy to accidentally break out of. I had a single file in S3, but because my logging settings were wrong, I broke the free tier with junk logs.

The t2 micro ec2 instances are fine, but you need to be very careful about their storage and network egress.

Best use I’ve had for AWS that has managed to stay within the free limits has been Lambda. Managed to convert a couple self hosted discord bots to a few Lambda functions, works great. Plugging it into CloudFormation and tying up CI/CD with CodePipeline and the like were overkill but good learning exp.

I don’t think there’s any ECS free tier, but you can fit a private container repository in the free S3 limits as well.

Yup. Treating VMs similar to containers. The alternative, older-school method is cold snapshots of the VM, apply patches/updates (after pre-prod testing & validation), usually in an A/B or red/green phased rollout, and roll back snaps when things go tits up.

If you are in a position to ask this question, it means you have no actual uptime requirements, and the question is largely irrelevant. However, in the “real” world where seconds of downtime matter:

Things not changing means less maintenance, and nothing will break compatibility all of the sudden.

This is a bit of a misconception. You have just as many maintenance cycles (e.g. “Patch Tuesdays”) because packages constantly need security updates. What it actually means is fewer, better-documented changes per maintenance cycle. This makes it easier and faster to determine what’s likely to break before you even enter your testing cycle.

Less chance to break.*

Sort of. Security changes frequently break running software, especially 3rd party software that just happened to need a certain security flaw or out-of-date library to function. The world has got much better about this, but it’s still a huge headache.

Services are up to date anyway, since they are usually containerized (e.g. Docker).

Assuming that the containerized software doesn’t need maintenance is a great way to run broken, insecure containers. Containerization helps to limit attack surfaces and outage impacts, but it isn’t inherently more secure. The biggest benefit of containerization is the abstraction of software maintenance from OS maintenance. It’s a lot of what makes Dev(Sec)Ops really valuable.

Edit since it’s on my mind: Containers are great, but amateurs always seem to forget they’re all sharing the host kernel. One container causing a kernel panic, or running with misconfigured SHM settings, can take down the entire host. Virtual machines are much, much safer in this regard, but have their own downsides.

And, for Debian especially, there’s one of the biggest availability of services and documentation, since it’s THE server OS.

No it isn’t. THE server OS is the one that fits your specific use-case best. For us self-hosted types, sure, we use Debian a lot. Maybe. For critical software applications, organizations want a vendor to support them, if for no other reason than to offload liability when something goes wrong.

It is running only rarely. Most of the time, the device is powered off. I only power it on a few times per month when I want to print something.

This isn’t a server. It’s a printing appliance. You’re going to have a similar experience of needing updates with every power-on, but with CoreOS, you’re going to have many more updates. When something breaks, you’re going to have a much longer list of things to track down as the culprit.

And, last but not least, I’ve lost my password.

JFC uptime and stability aren’t your problem. You also very probably don’t need to wipe the OS to recover a password.

My Raspberry Pi on the other hand is only used as print server, running Octoprint for my 3D-printer. I have installed Octoprint there in the form of Octopi, which is a Raspian fork distro where Octoprint is pre-installed, which is the recommended way.

That is the answer to your question. You’re running this RPi as a “server” for your 3d printing. If you want your printing to work reliably, then do what Octoprint recommends.

What it sounds like is you’re curious about CoreOS and how to run other distributions. Since breakage is basically a minor inconvenience for you, have at it. Unstable distros are great learning experiences and will keep you up to date on modern software better than “safer” things like Debian Stable. Once you get it doing what you want, it’ll usually keep doing that. Until it doesn’t, and then learning how to fix it is another great way to get smarter about running computers.

E: Reformatting

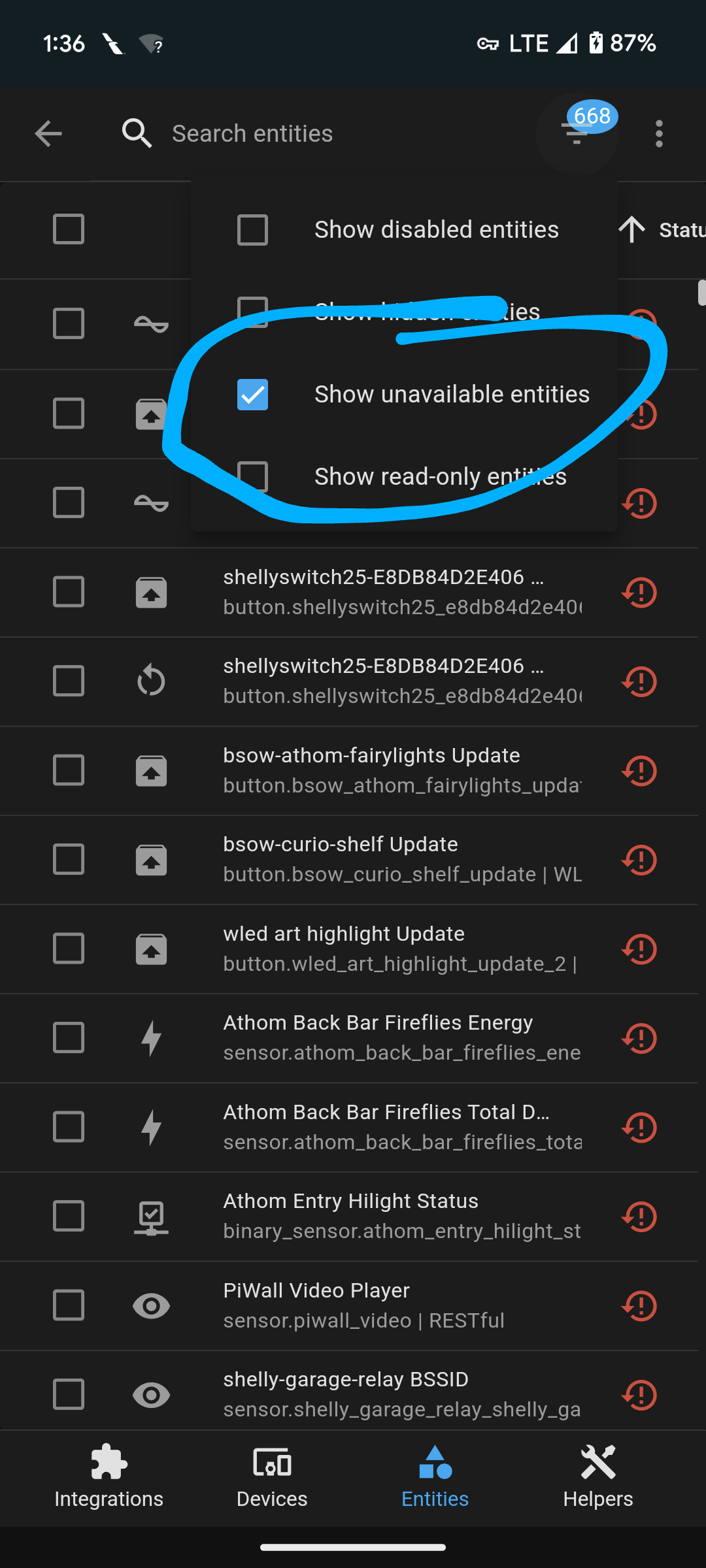

Fair enough, just seems like a lot of work vs. 2 clicks on the filters.

You don’t need to ground your Shelly if the circuit is otherwise properly grounded. The Shelly will fail open if something internal shorts.

Per the rest of the discussion re: hot wire loops to switches with no neutral or ground, just put the Shelly into the upstream junction box. (Wherever the switch wire branches from the circuit. Usually that’s where the light is.)

… Why? They exist for a reason, the interface has filter and sort options.

Conversely, I’m a system engineer who is involved in the hiring process for software development, in addition to various types of platform and cloud engineering jobs.

I look for, in order of importance:

That’s it. College degree isn’t even considered, but if you got relevant experience in college that can count.

Most of my interview time is spent digging into technical details to see if you can back up your resume claims. The rest is getting an idea of how you approach challenges and think about things.

As far as certifications, they’re often required to get in the door due to qualification regulations. Especially security certs. If you list them, I’ll ask a few questions just to make sure you actually know what’s up.

Depends on your specific VPN, but look for a feature or setting called “split tunnel.” It should create a separate non-vpn route for the local network.

Usually it’s a client-side setting, but not always if the tunnel is built on connection.
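If it helps to see the idea, here’s a minimal sketch of the routing decision a split tunnel makes. It is only an illustration of the logic, not how any particular VPN client implements it, and the `192.168.1.0/24` range is an assumed home LAN, so substitute your own.

```python
import ipaddress

# Hypothetical home LAN range; substitute your own.
LOCAL_NETWORKS = [ipaddress.ip_network("192.168.1.0/24")]


def route_for(destination: str) -> str:
    """Return 'local' for LAN destinations, 'vpn' for everything else."""
    addr = ipaddress.ip_address(destination)
    if any(addr in net for net in LOCAL_NETWORKS):
        return "local"  # bypasses the tunnel
    return "vpn"  # goes through the tunnel


print(route_for("192.168.1.50"))  # a printer or NAS on the LAN
print(route_for("8.8.8.8"))  # anything out on the internet
```

In a real client this shows up as route-table entries rather than code you write, but the effect is the same: local ranges get their own route and skip the VPN.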

lol what a weird take. all the problems of overconsumption and ecosystem collapse aside, there’s not much inherently worse about seafood than landfood.

cats aren’t more picky than us. they gladly eat all kinds of trash and raw dead meat. they’re picky about what we feed them. The respective tolerance for “toxins” between us and cats is, again, relative to the environment we put them in and the specific set of toxins.